The End of Our Deal With Technology

Liminal Blink: Why AI’s unpredictability will force leaders to design guardrails instead of guarantees.


In This Article

You’ll learn why designing AI guardrails is the next great leadership skill.

The next wave of AI will behave less like a tool and more like a collaborator—and sometimes, a chaotic one. This essay explores what happens when predictability disappears from our systems and is replaced with intentional uncertainty:

The Broken Deal: Our 250-year relationship with predictable machines is ending.

Control vs. Chaos: A tale of two AIs that explains the new age of uncertainty.

Randomness Is the New Operating System: What 100 AI-generated cat images reveal about the future of business predictability.

Leading with Intent: A new leadership framework for designing guardrails in a world where technology makes its own decisions.

The End of Our Deal With Technology

The next decade of technology leadership will be defined by one idea: our tools will no longer be predictable. So what happens when our systems stop following our rules?

For 250 years, we’ve had a simple deal with our machines: we provide the thinking, they provide the work. From the steam engine to the spreadsheet, our tools have always obeyed us. When they failed, it was because our instructions were flawed.

That deal—humans commanding, machines obeying—is ending. And we’re completely unprepared for what comes next.

A lot of attention has gone to the jobs AI might replace. CEOs like Dario Amodei (Anthropic) [1], Jim Farley (Ford) [2], and Sam Altman (OpenAI) [3] have all weighed in. Altman’s view is perhaps the most dire, suggesting that “… entire classes of jobs will go away.”

But some roles will remain, and new kinds of work will emerge.

In the post-AI world, the most valuable leadership skill will be managing within uncertainty, not managing towards outcomes.

The Age of Uncertainty

Today, most people meet AI through a text box: you type in a prompt, it replies. That model works for curiosity and creativity. But in operations, safety, or any high-consequence domain where decisions carry real-world risk, AI won’t live in a chat window—it will live “behind the scenes”, running automatically on relevant data.

Now imagine leading a company where systems’ inputs aren’t visible, and their results can’t be reliably reproduced. That’s the world emerging around us. That’s reality when AI is used to make (or suggest) decisions based on what it observes.

The CEO of NVIDIA, Jensen Huang, put it best in his 2024 interview at the World Government Summit in Dubai: “It is our job to create computing technology such that nobody has to program.” [4]

Systems that aren’t programmed don’t follow rules—they navigate a sea of probabilistic outcomes. They will think and act better than humans, sense and interact with the world on their own, and they won’t wait for permission. They’ll observe, decide, and act—sometimes in ways we can’t predict.

These systems will run on probability, not certainty, so their behavior will be unpredictable.

Let’s make this shift tangible with two examples: one about cars, and one about cats.

Which is the Better Driver—Control or Chaos?

Imagine two self-driving cars: Control and Chaos. They look identical, and are built in the same factory from the same parts. But at their core, each is a different species of machine.

Control is a library of rules. It follows code meticulously written and tested by human engineers. Every decision can be traced back to a specific instruction: “Yield to the car on your right at a 4-way stop.” It is a marvel of deterministic logic.

Chaos is a garden of experience. It taught itself to drive over millions of simulated lifetimes, making more mistakes than all human drivers combined—and learning from each one.

Both work flawlessly most of the time. The difference only becomes visible at the edge of failure. When Control crashes, the situation is tragic but traceable. When Chaos crashes, what is the cause? There is no line of code to inspect; no specific rule to update. We can evaluate the inputs and the outcomes, but not the reasoning behind them [5]. There isn’t a way to ask why it crashed, because it just knows how to drive.

Failures in AI systems may be errors of judgment, not bugs.

Have you ever wanted to see Einstein racing Feynman in futuristic race cars? I did, so I asked Sora 2 to create it for me. Try it for yourself, and share your video in the comments. Let’s see how many variations we get! (Here is a direct link to Sora if the video can’t load for you).

Herding Cats: One Input, One Hundred Outputs

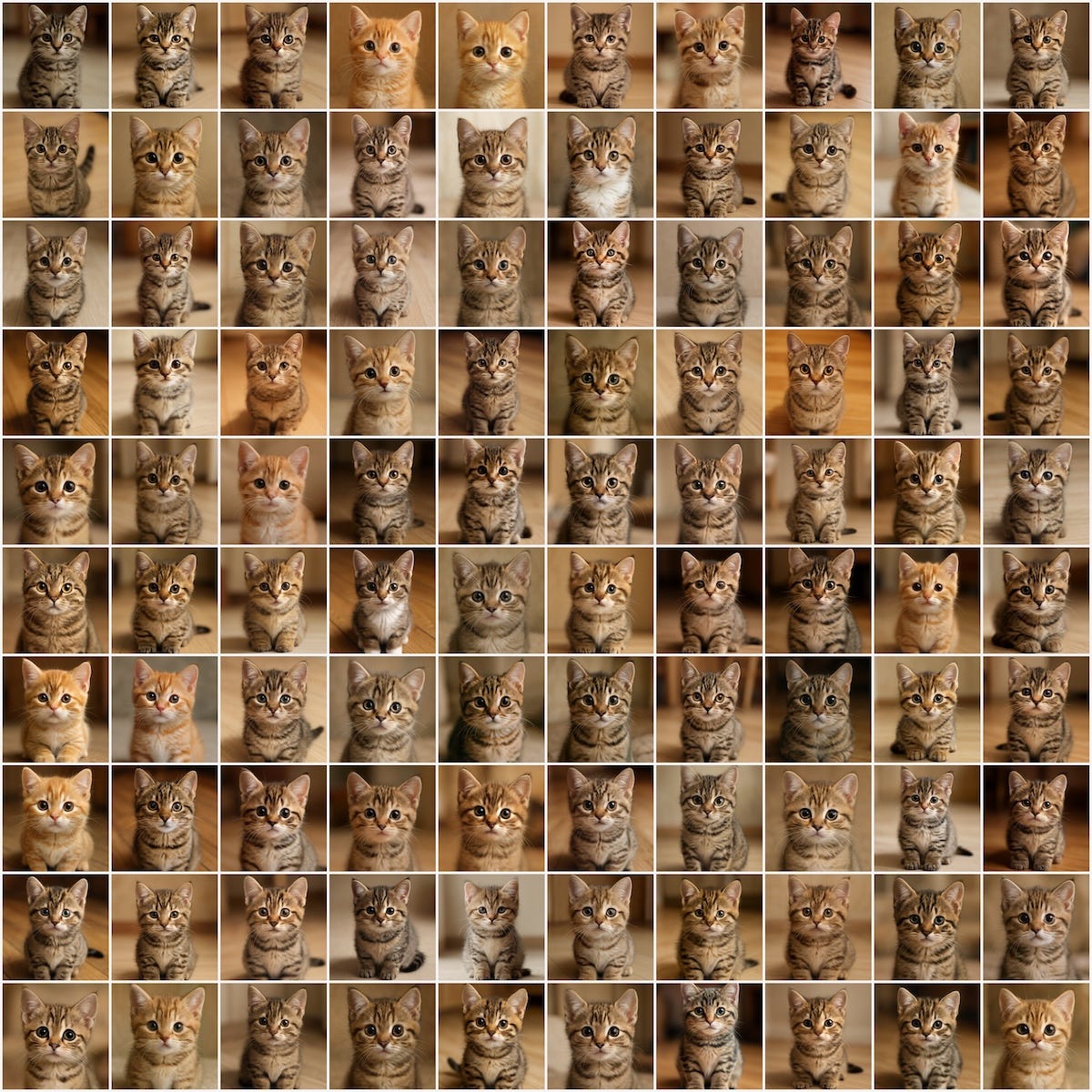

This shift from deterministic to probabilistic logic isn’t just for complex systems (like cars)—it touches everything. To make it visible, I asked ChatGPT to write Python code to generate one hundred images using the same three-word prompt: a cute cat.

The results, arranged in the mosaic below, are a visual study in the beauty of randomness: One input. One hundred outcomes. Each image was born from the same three-word input, yet no two are identical.
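To see the "one input, many outputs" effect without calling an image model, here is a minimal sketch in Python. It is a toy stand-in, not the code behind the mosaic: the attribute lists, `generate`, and `run_batch` are all hypothetical names I made up for illustration, and each "image" is just a tuple of sampled attributes.

```python
import random

# Hypothetical stand-in for an image model: each "image" is a tuple of
# attributes sampled at random. Nothing here touches a real model or API.
ATTRIBUTES = {
    "fur": ["tabby", "black", "white", "ginger", "calico"],
    "pose": ["sitting", "sleeping", "stretching", "pouncing"],
    "background": ["sofa", "garden", "windowsill", "studio"],
    "lighting": ["soft", "golden hour", "bright", "moody"],
}


def generate(prompt: str, rng: random.Random) -> tuple:
    """Return one sampled 'image'. The prompt is fixed and does not change the odds."""
    discrete = tuple(rng.choice(options) for options in ATTRIBUTES.values())
    hue_shift = rng.random()  # continuous component, like pixel-level variation
    return discrete + (hue_shift,)


def run_batch(prompt: str, n: int, seed=None) -> list:
    """Generate n outputs for the same prompt; seed=None means a fresh random state."""
    rng = random.Random(seed)
    return [generate(prompt, rng) for _ in range(n)]


if __name__ == "__main__":
    outputs = run_batch("a cute cat", 100)
    print(f"{len(set(outputs))} distinct outputs out of {len(outputs)}")
```

In this toy, passing the same `seed` replays the batch exactly. That is a property of the sketch only; whether a hosted model offers a comparable control depends on the service, so treat it as an assumption to verify rather than a given.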

Randomness is the new operating system.

At first glance, the randomness feels harmless—maybe even charming. But the same randomness that makes each cat photo distinct will soon affect hiring models, trading systems, and autonomous processes in every industry.

Measuring Difference and Detecting Outliers

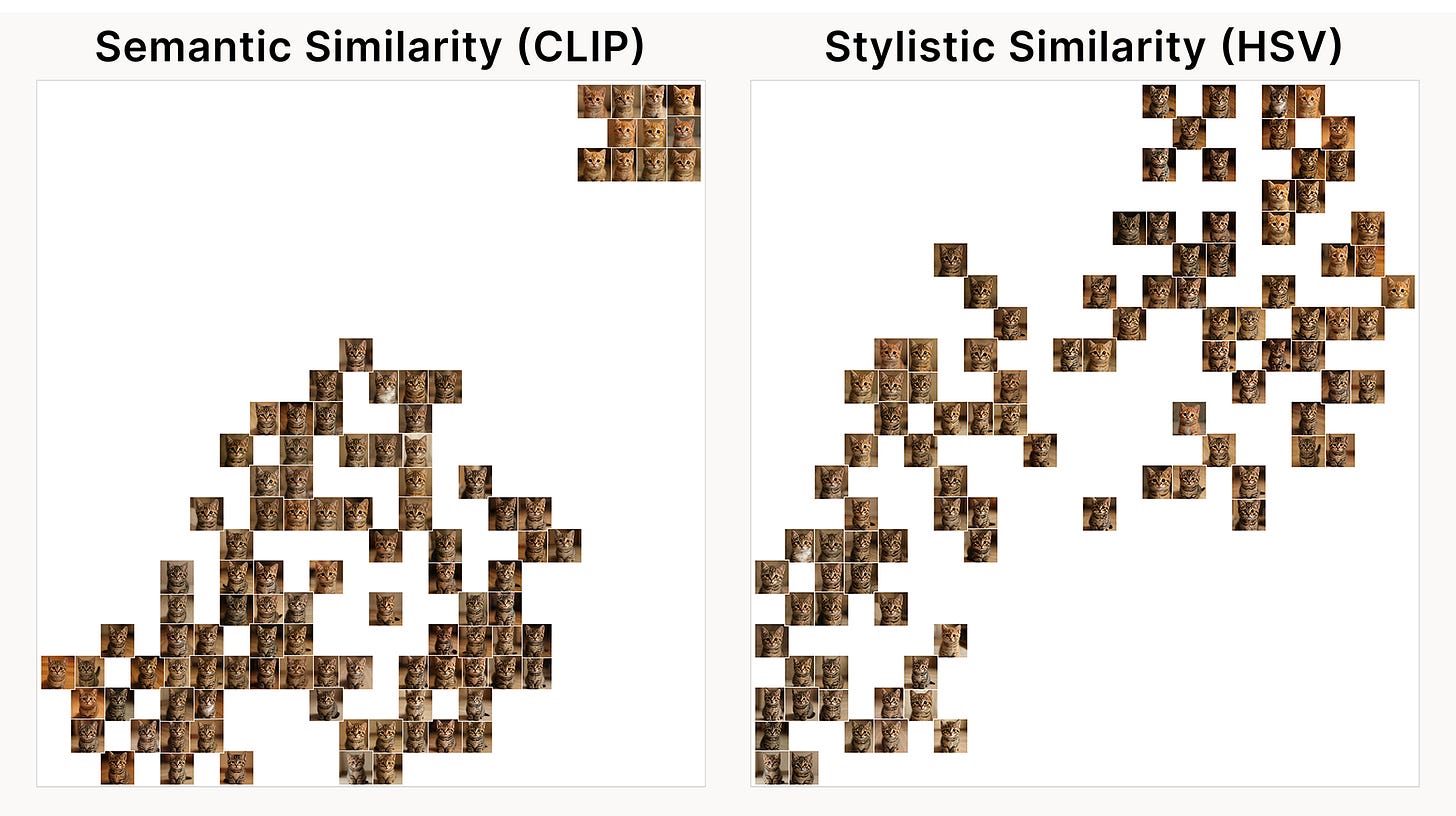

Even measuring how much these images differ is complex.

To illustrate, I analyzed the 100 images in two ways. One method grouped the cats by what the AI thought it was showing (semantic similarity), revealing a few distinct “concepts” of a cat. Another method grouped them by their raw visual data (hue, saturation, and value), revealing the micro-randomness that makes each image unique.
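The second method can be sketched with nothing but the standard library. This is an illustration under my own assumptions, not the analysis code from the repository: `hsv_signature` and `signature_distance` are hypothetical helpers, an image is represented as a plain list of (r, g, b) tuples, and the semantic-similarity method is not shown because it would need an embedding model.

```python
import colorsys
import math


def hsv_signature(pixels):
    """Summarize an image (list of 0-255 RGB tuples) as mean hue, saturation, value.

    Hue is an angle, so it is averaged on the circle rather than arithmetically.
    """
    sin_sum = cos_sum = s_sum = v_sum = 0.0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        sin_sum += math.sin(2 * math.pi * h)
        cos_sum += math.cos(2 * math.pi * h)
        s_sum += s
        v_sum += v
    n = len(pixels)
    hue = (math.atan2(sin_sum, cos_sum) / (2 * math.pi)) % 1.0
    return hue, s_sum / n, v_sum / n


def signature_distance(a, b):
    """Euclidean distance between two signatures, with hue wrapping around at 1.0."""
    dh = abs(a[0] - b[0])
    dh = min(dh, 1.0 - dh)
    return math.sqrt(dh ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2)


if __name__ == "__main__":
    red_image = [(255, 0, 0)] * 4
    green_image = [(0, 255, 0)] * 4
    d = signature_distance(hsv_signature(red_image), hsv_signature(green_image))
    print(f"distance between red and green test images: {d:.3f}")
```

Two images with the same subject can sit far apart under this measure, and two very different subjects can sit close together, which is why the two groupings tell different stories.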

The key insight is this: if one of these 100 outputs had been a dangerous outlier—say, a critical component designed outside of safety tolerances—one analysis might spot it while another would miss it entirely. Detecting unpredictable failures requires a new set of tools, because the failures themselves will be unpredictable.
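Here is a small sketch of that blind spot, assuming a simple z-score check as the detection method (one common choice, not necessarily the right one for your system). The two measurement lists are invented for illustration: `color_score` and `wall_thickness_mm` are hypothetical views of the same 100 generated designs, and one design is deliberately placed far outside tolerance.

```python
import statistics


def find_outliers(values, z_threshold=3.0):
    """Return indices whose distance from the mean exceeds z_threshold standard deviations."""
    mean = statistics.mean(values)
    spread = statistics.stdev(values)
    if spread == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / spread > z_threshold]


# Hypothetical measurements for 100 generated designs (made-up data).
color_score = [0.50 + 0.01 * ((i % 7) - 3) for i in range(100)]
wall_thickness_mm = [2.00 + 0.01 * ((i % 7) - 3) for i in range(100)]
wall_thickness_mm[42] = 0.40  # one design far outside tolerance

if __name__ == "__main__":
    print("color view flags:", find_outliers(color_score))
    print("thickness view flags:", find_outliers(wall_thickness_mm))
```

The color view reports nothing unusual, while the thickness view flags design 42. Same batch, same outlier, and only one of the two analyses sees it.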

We now have systems whose default state is variation, not repetition. Prepare for the unexpected.

And here’s another challenge: choose any single image from the mosaic and try to regenerate it exactly. The probability of reproducing it on demand is close to zero.

If you’d like to experiment, the full Python code that generated and analyzed these images is on GitHub: github.com/liminalblinkconsulting/substack-cat-variations.

Design Guardrails, Not Guarantees

The impact of AI’s randomness isn’t a technical problem to solve; it’s an opportunity to build smarter ways to manage your business.

Every process that depends on repeatability—compliance, quality control, hiring, finance—will need new guardrails: frameworks that tolerate variation without letting chaos leak into your margins.

This is the real leadership challenge. Managing within uncertainty. Turning probabilistic behavior into operational confidence.

Companies that adapt first will treat variability as a design constraint, not a failure.

So start the conversation inside your own company. Ask:

When AI is applied, can you determine outliers in its outputs?

How do you know the decisions are within tolerance—and who defines these tolerances?

If it makes a mistake, will you know before your customers do?
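One way to make these questions concrete is a thin wrapper that checks every AI output against a named tolerance before anything acts on it. The sketch below is a starting point under my own assumptions, not a finished framework: `Tolerance`, `Decision`, and `guarded` are hypothetical names, and the "model" is any callable that returns a number.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tolerance:
    low: float
    high: float
    owner: str  # who defined this tolerance, so accountability is explicit


@dataclass
class Decision:
    value: float
    accepted: bool
    reason: str


def guarded(model: Callable[[], float], tolerance: Tolerance, fallback: float) -> Decision:
    """Run the model once; accept its output only if it falls inside the tolerance."""
    proposed = model()
    if tolerance.low <= proposed <= tolerance.high:
        return Decision(proposed, True, "within tolerance")
    reason = (
        f"model proposed {proposed}, outside [{tolerance.low}, {tolerance.high}] "
        f"set by {tolerance.owner}; escalate for review"
    )
    return Decision(fallback, False, reason)


if __name__ == "__main__":
    discount_limit = Tolerance(low=0.0, high=0.25, owner="pricing team")
    print(guarded(lambda: 0.10, discount_limit, fallback=0.0))
    print(guarded(lambda: 0.90, discount_limit, fallback=0.0))
```

The wrapper does not make the model predictable. It records who owns the tolerance, substitutes a safe fallback when the output drifts outside it, and produces a reason you can review before a customer ever sees the result.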

If you’d like a sparring partner to help your team think through uncertainty, design guardrails, and turn ambiguity into action, let’s talk.

— Rob Allegar, Liminal Blink Consulting

I’m a builder, advisor, and lifelong tinkerer exploring what happens when technology stops behaving like a tool and starts acting like a collaborator. In Liminal Blink, I explore the space between ideas and execution, helping people build things that matter. roballegar.com

Footnotes

1. https://www.axios.com/2025/05/28/ai-jobs-white-collar-unemployment-anthropic

2. https://finance.yahoo.com/news/ford-ceo-says-ai-replace-203114506.html

3. Here is Sam Altman talking about jobs. The quote is at the 10m 50s mark.

4. Jensen Huang discussing AI. The quote is at the 17m 53s mark.

5. There is some work and research in the area of guardrails and explainability that seeks to solve some of these issues. But the solutions are neither pervasive nor consistent, and may not be available in all applications. If you’re curious, here is the OpenAI cookbook on guardrails.
